Introduction

The big_vision/flexivit/flexivit_s_i1k.npz model can handle a wide range of image-based tasks, which has made it a valuable asset for researchers, developers, and industry specialists. To make full use of its capabilities and get the best results from your projects, it is important to understand how to use this model effectively.

This guide offers an in-depth walkthrough for users who want to incorporate big_vision/flexivit/flexivit_s_i1k.npz into their projects. It covers the key steps: setting up the development environment, preparing and processing data, training the model, and evaluating its performance. By following it, users can get the most out of the model and improve their image processing and analysis workflows.

With its strong capabilities and efficient processing, the big_vision/flexivit/flexivit_s_i1k.npz model helps users tackle complex visual tasks with greater precision and efficiency. This guide is designed to help both seasoned professionals and newcomers apply advanced computer vision techniques in their image analysis and machine learning projects.

Understanding big_vision/flexivit/flexivit_s_i1k.npz

The file big_vision/flexivit/flexivit_s_i1k.npz contains pre-trained weights for a FlexiViT model. The name indicates the Small (S) variant trained on ImageNet-1k (i1k). The model stands out for its versatility and efficiency across a range of image-processing tasks, and its ability to adapt to different patch sizes makes it a useful tool for both research and practical applications.

Understanding FlexiViT

The architecture behind big_vision/flexivit/flexivit_s_i1k.npz, FlexiViT, is a Vision Transformer (ViT) variant that supports flexible patch sizes. Traditional ViTs are trained with a single fixed patch size. FlexiViT instead randomizes the patch size during training, producing a single set of weights that performs well across multiple patch sizes. This enables more efficient transfer learning and pre-training, which benefits developers and researchers working on diverse computer vision tasks.
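The following sketch makes the idea concrete by showing how the patch size changes the length of the token sequence. It assumes 240×240 inputs and the patch sizes that divide 240 evenly, which is the setup described in the FlexiViT paper. It also draws one patch size uniformly at random per training step. The helper names are illustrative and are not big_vision's API.

```python
import random

# Assumption: 240x240 inputs, as in the FlexiViT paper's setup.
IMAGE_SIZE = 240
# Patch sizes that evenly divide 240, spanning the 8x8 to 48x48 range.
PATCH_SIZES = [p for p in range(8, 49) if IMAGE_SIZE % p == 0]


def num_tokens(patch_size: int, image_size: int = IMAGE_SIZE) -> int:
    """Number of patch tokens when a square image is split into square patches."""
    grid = image_size // patch_size
    return grid * grid


def sample_patch_size(rng: random.Random) -> int:
    """Draw one patch size uniformly (a simplifying assumption) for a training step."""
    return rng.choice(PATCH_SIZES)


if __name__ == "__main__":
    rng = random.Random(0)
    for step in range(5):
        p = sample_patch_size(rng)
        print(f"step {step}: patch {p}x{p} -> {num_tokens(p)} tokens")
```

Smaller patches produce many more tokens: 8×8 patches give 900 tokens, while 48×48 patches give only 25. This is why the patch size acts as a compute dial.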

Core Advantages of FlexiViT

FlexiViT’s standout features include:

- Patch Size Flexibility: A single set of weights handles patch sizes from 8×8 to 48×48 pixels and performs on par with standard ViTs trained at each individual patch size. This adaptability makes it suitable for different computational budgets.

- Resource-Efficient Transfer Learning: FlexiViT optimizes memory and compute usage during transfer learning, enabling fine-tuning with larger patches and deploying with smaller patches for effective performance.

- Comparable Performance: When compared to fixed-patch ViTs, FlexiViT demonstrates comparable or superior performance in many scenarios, even with diverse patch sizes.

- Adaptable Computation: The model’s design allows for a trade-off between computation and prediction accuracy, making it suitable for a variety of hardware capabilities.
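The compute/accuracy trade-off can be expressed as a simple rule: use the smallest patch size that fits a token budget, since that gives the most tokens and usually the highest accuracy. The sketch below is a hypothetical helper and is not part of big_vision. It assumes 240×240 inputs and counts only patch tokens, ignoring any class token.

```python
# Assumption: 240x240 inputs and the patch sizes that divide 240 evenly.
IMAGE_SIZE = 240
PATCH_SIZES = [p for p in range(8, 49) if IMAGE_SIZE % p == 0]


def pick_patch_size(max_tokens: int, image_size: int = IMAGE_SIZE) -> int:
    """Return the smallest allowed patch size whose patch-token count fits the budget.

    Smaller patches mean more tokens: typically higher accuracy, but more compute.
    """
    for p in sorted(PATCH_SIZES):
        grid = image_size // p
        if grid * grid <= max_tokens:
            return p
    raise ValueError(f"no patch size fits a budget of {max_tokens} tokens")


if __name__ == "__main__":
    for budget in (1000, 256, 100):
        print(budget, "->", pick_patch_size(budget))
```

For example, pick_patch_size(256) returns 15, because a 15×15 patch produces a 16×16 grid of exactly 256 tokens.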

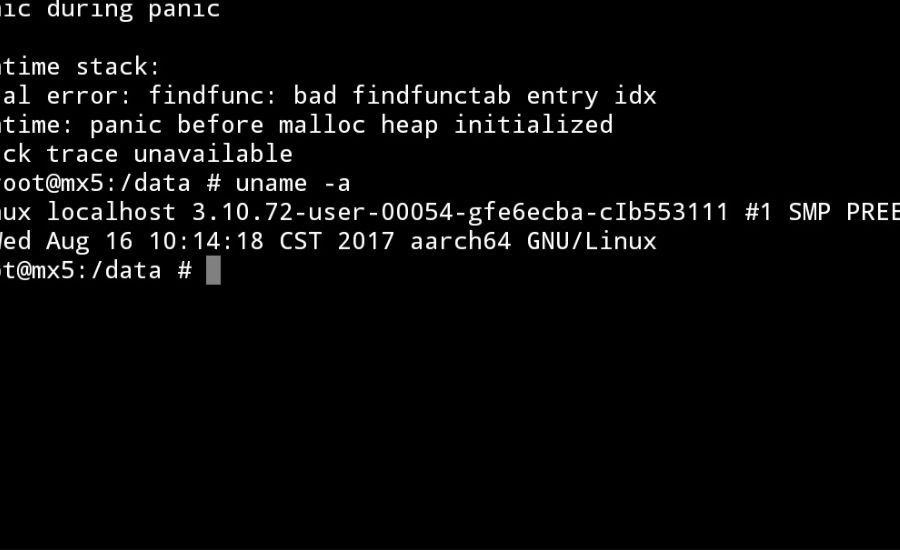

Setup and Installation

To utilize big_vision/flexivit/flexivit_s_i1k.npz, setting up the environment is critical. The installation involves ensuring dependencies are in place and configuring your development space for optimal performance. Here’s a step-by-step guide:

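- Install Required Libraries: Ensure you have tensorflow, tensorflow_datasets, and the other libraries big_vision depends on. big_vision itself is built on JAX and Flax and uses TensorFlow mainly for its input pipelines.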

- Download Datasets: Use tensorflow_datasets to acquire and preprocess datasets. For larger datasets like ImageNet, it’s recommended to prepare them ahead of time to streamline the process.

- Storage Considerations: For faster data access, use efficient formats like TFRecord or LMDB, which reduce data loading times and improve training efficiency.

Loading and Preprocessing Data

The preprocessing phase impacts the model’s performance significantly. Here’s how to handle data effectively:

- Supported Formats: Common image formats such as JPEG, PNG, and TIFF can be used once they are decoded into arrays. Because big_vision is a JAX codebase, convert images to NumPy arrays (or let the tf.data pipeline decode them) rather than to PyTorch tensors.

- Data Augmentation: Enhance the model’s robustness with techniques such as:

- Random Cropping: Helps the model generalize across different image regions.

- Horizontal Flipping: Increases data diversity by simulating mirrored images.

- Color Jittering: Randomly adjusts color properties to mimic lighting variations.

- Rotation and Zoom: Improves object recognition by simulating different perspectives and scales.
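Below is a minimal NumPy sketch of three of these augmentations. It assumes float images in [0, 1] with shape (height, width, channels) and a 240-pixel output crop. Color jittering is reduced to a brightness change, and rotation/zoom is omitted. big_vision implements its own preprocessing ops in TensorFlow, so treat this as an illustration of the techniques rather than the pipeline FlexiViT was trained with.

```python
import numpy as np


def random_crop(img: np.ndarray, size: int, rng: np.random.Generator) -> np.ndarray:
    """Cut a random size x size window out of an HxWxC image."""
    h, w = img.shape[:2]
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return img[top:top + size, left:left + size]


def random_hflip(img: np.ndarray, rng: np.random.Generator, p: float = 0.5) -> np.ndarray:
    """Mirror the image left-to-right with probability p."""
    return img[:, ::-1] if rng.random() < p else img


def color_jitter(img: np.ndarray, rng: np.random.Generator, max_delta: float = 0.2) -> np.ndarray:
    """Scale brightness by a random factor in [1 - max_delta, 1 + max_delta], then clip to [0, 1]."""
    factor = 1.0 + rng.uniform(-max_delta, max_delta)
    return np.clip(img * factor, 0.0, 1.0)


def augment(img: np.ndarray, rng: np.random.Generator, size: int = 240) -> np.ndarray:
    """Apply crop, flip, and brightness jitter in sequence."""
    img = random_crop(img, size, rng)
    img = random_hflip(img, rng)
    return color_jitter(img, rng)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((256, 256, 3))  # stand-in for a decoded photo
    print(augment(image, rng).shape)
```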

Initializing the Model

Initialization sets the stage for training. FlexiViT benefits from pre-trained weights, typically sourced from a strong ViT teacher, which can lead to superior performance. Training should account for the range of patch sizes (8×8 to 48×48 pixels) to maintain model flexibility and adaptability.
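You can inspect .npz checkpoints with NumPy before wiring them into a model. The sketch below assumes parameter names are stored as '/'-separated paths and nests them into dictionaries. The demo writes a small synthetic file, and its key names and shapes are hypothetical. To see the real layout, inspect flexivit_s_i1k.npz with list(np.load(path).files).

```python
import os
import tempfile

import numpy as np


def load_npz_as_tree(path):
    """Load an .npz file and nest '/'-separated keys into dictionaries."""
    tree = {}
    with np.load(path) as data:
        for key in data.files:
            *parents, leaf = key.split("/")
            node = tree
            for name in parents:
                node = node.setdefault(name, {})
            node[leaf] = data[key]  # reads the array into memory
    return tree


def count_params(tree):
    """Total number of scalar values in a nested dict of arrays."""
    return sum(count_params(v) if isinstance(v, dict) else v.size for v in tree.values())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "toy_checkpoint.npz")
        # Hypothetical keys and shapes, only for demonstration.
        np.savez(path, **{"embedding/kernel": np.zeros((16, 16, 3, 384)),
                          "head/bias": np.zeros(1000)})
        params = load_npz_as_tree(path)
        print(sorted(params), count_params(params))
```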

Fine-Tuning and Optimization

Fine-tuning FlexiViT requires strategic approaches to harness its full potential:

- Patch Size Curriculum: Train with a progressive patch size approach for resource-efficient fine-tuning, starting with larger sizes and transitioning to smaller ones.

- Compute-Adaptive Transfer: Optimize training by using large patch sizes to save memory and compute, then deploy with smaller patch sizes for enhanced performance.

- Hyperparameter Tuning: Employ techniques like nested cross-validation to select optimal parameters, ensuring a balance between computational expense and model performance improvements.
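One way to express the patch size curriculum is as a schedule that maps each training step to a patch size. The sketch below splits training into equal stages and moves from 48×48 down to 16×16 patches. Both the equal-length stages and the default list of sizes are assumptions to tune for your task. They are not values taken from the FlexiViT paper.

```python
def curriculum_patch_size(step: int, total_steps: int,
                          patch_sizes=(48, 40, 30, 24, 20, 16)) -> int:
    """Map a training step to a patch size, moving from large to small patches.

    Training is split into len(patch_sizes) equal-length stages (an assumption).
    """
    if not 0 <= step < total_steps:
        raise ValueError("step must be in [0, total_steps)")
    stage = step * len(patch_sizes) // total_steps
    return patch_sizes[stage]


if __name__ == "__main__":
    total = 600
    for step in (0, 100, 300, 599):
        print(step, "->", curriculum_patch_size(step, total))
```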

Model Performance Evaluation

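flexivit_s_i1k.npz is an ImageNet-1k classification checkpoint, so top-1 accuracy is the natural metric for the model itself. When the model serves as a backbone for detection or localization tasks, evaluation uses the following metrics: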

- Intersection over Union (IoU): Measures the overlap between predicted and actual bounding boxes, crucial for object localization tasks.

- Average Precision (AP): Summarizes the precision-recall curve for a single class as the area under it.

- Mean Average Precision (mAP): Computes AP across multiple classes for comprehensive evaluation.

- Precision and Recall: Precision is the fraction of positive predictions that are correct, so it penalizes false positives. Recall is the fraction of actual positives that the model detects, so it penalizes false negatives.

- F1 Score: The harmonic mean of precision and recall, which combines both into a single overall performance metric.
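The box-level and count-level metrics above take only a few lines of plain Python. This sketch assumes boxes are given as (x_min, y_min, x_max, y_max). It also assumes that true positives, false positives, and false negatives have already been counted. For detection, this counting is typically done by matching predictions to ground truth at an IoU threshold such as 0.5.

```python
def iou(box_a, box_b):
    """Intersection over Union for boxes given as (x_min, y_min, x_max, y_max)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 (their harmonic mean) from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(precision_recall_f1(tp=8, fp=2, fn=4))
```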

Visualizing Results

Visualization aids in interpreting model performance:

- F1 Score and Precision-Recall Curves: Illustrate the balance between precision and recall at different thresholds.

- Confusion Matrix: Provides insight into true positives, false positives, true negatives, and false negatives.

- Normalized Confusion Matrix: Divides each row by the number of samples in that class, so performance can be compared across classes of different sizes.

- Validation Batch Visuals: Shows ground truth vs. predictions for easy evaluation.
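You can compute a confusion matrix and its row-normalized form without extra libraries, as the sketch below shows for integer class labels. It assumes the common convention that rows are true classes and columns are predicted classes. Under that convention, the diagonal of the normalized matrix is per-class recall. A plotting library such as matplotlib can then render either matrix as a heatmap.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Count (true, predicted) pairs; rows are true classes, columns are predictions."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def normalize_rows(cm):
    """Divide each row by its total; empty rows stay all zeros."""
    out = []
    for row in cm:
        total = sum(row)
        out.append([c / total if total else 0.0 for c in row])
    return out


if __name__ == "__main__":
    cm = confusion_matrix([0, 0, 1, 1, 2], [0, 1, 1, 1, 0], num_classes=3)
    print(cm)
    print(normalize_rows(cm))
```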

Setting Up and Using FlexiViT with TensorFlow Datasets (TFDS)

FlexiViT is a Vision Transformer that works with varying patch sizes, which makes it adaptable to a range of image processing tasks. It handles both training and fine-tuning efficiently, and big_vision/flexivit/flexivit_s_i1k.npz is one of its pre-trained checkpoints.

Installing TensorFlow Datasets (TFDS)

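big_vision, the codebase that hosts FlexiViT, relies on TensorFlow Datasets (TFDS) for dataset management, which improves reproducibility and streamlines data preparation. To download the datasets you need, run the big_vision.tools.download_tfds_datasets module with the dataset names as arguments, for example python -m big_vision.tools.download_tfds_datasets cifar10. Datasets that TFDS cannot download automatically, such as ImageNet, must be placed manually in ~/tensorflow_datasets/downloads/manual/.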

Initial Model Setup and Training

Training FlexiViT effectively begins with loading pre-trained weights, which lay the groundwork for improved training outcomes and support its unique flexibility in patch size selection. This adaptability helps cater to various computational capabilities and can be leveraged during both training and deployment.

Fine-Tuning Process: Fine-tuning involves customizing the model for specific tasks. Start with larger patch sizes to fine-tune, then switch to smaller ones for deployment, balancing performance and resource efficiency. This approach allows for continued adaptability, maintaining flexibility even after fine-tuning.

Hyperparameter Tuning: To extract the best performance from FlexiViT, consider hyperparameter optimization techniques like cross-validation. This ensures that the model generalizes well to unseen data while optimizing for a balance between performance and computational cost.

Model Evaluation

FlexiViT’s effectiveness is assessed using standard metrics such as Mean Average Precision (mAP), Average Precision (AP), and Intersection over Union (IoU). These metrics evaluate both classification accuracy and object localization. Additional measures include Precision, Recall, and the F1 Score, which collectively offer insights into how well the model detects true instances, avoids false positives, and balances precision and recall.

Visualizing Performance: Employ visual tools like Precision-Recall curves and confusion matrices for a comprehensive understanding of model performance at different thresholds. This helps identify areas of improvement and adapt strategies accordingly.

Comparing FlexiViT to Baseline Models

FlexiViT has been shown to outperform conventional Vision Transformers when the models are evaluated at patch sizes the conventional ViTs were not trained on. This makes it especially valuable in tasks requiring quick adaptation and efficient computation. Because it can be fine-tuned with larger patch sizes and deployed with smaller ones, it enables resource-efficient learning and is well suited to environments with limited resources.

Key Features of flexivit_s_i1k.npz

The model file flexivit_s_i1k.npz includes pre-trained FlexiViT weights and highlights several advantages:

- Adaptive to Multiple Patch Sizes: FlexiViT accommodates patch sizes from 8×8 to 48×48 pixels with one set of weights and matches standard ViTs trained at each individual size.

- Resource-Efficient Transfer Learning: By adjusting input grid sizes, FlexiViT supports efficient transfer learning, reducing computational costs.

- Versatile and Scalable: Pre-trained models exhibit comparable performance to fixed-patch ViTs, enabling easy transfer to new tasks.

- Cost-Effective Pre-training: A single pre-trained model serves every patch size, so there is no need to pre-train a separate model for each one.

Advantages Over Traditional Vision Models

FlexiViT provides significant benefits:

- Enhanced Versatility: Unlike fixed-patch Vision Transformers, FlexiViT excels across various patch sizes, making it adaptable for different tasks and computational constraints.

- Improved Resource Management: FlexiViT’s flexible nature allows users to optimize training with larger patches while deploying smaller ones, balancing speed and performance.

- High-Performance Benchmarks: FlexiViT matches or exceeds traditional Vision Transformers, especially in cross-patch evaluations, underscoring its adaptability and performance.

- Quick Adaptation: The model’s built-in flexibility allows for seamless task adaptation with minimal retraining.

Environment Setup Guide

To maximize the performance of big_vision/flexivit/flexivit_s_i1k.npz, setting up your environment correctly is crucial.

1. Install Dependencies

Ensure that your development environment includes Python, TensorFlow, JAX, Flax, and libraries such as NumPy. Check the model’s documentation for specific versions and use pip or conda for installation. This step helps prevent errors during model training and usage.

2. Organize Your Workspace

Set up an organized workspace with designated folders for data, model weights, and results. Proper data management supports efficient workflow and makes resources easy to access. Confirm that your system (CPU/GPU) meets the model’s requirements for optimal performance.
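A short script can create the workspace layout reproducibly. The folder names below (data, weights, results) mirror the description above but are otherwise hypothetical, so adjust them to your project.

```python
import tempfile
from pathlib import Path

# Hypothetical folder names; adapt them to your own project layout.
WORKSPACE_DIRS = ("data", "weights", "results")


def create_workspace(root):
    """Create the workspace folders under root (safe to call repeatedly)."""
    root = Path(root)
    created = []
    for name in WORKSPACE_DIRS:
        folder = root / name
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for folder in create_workspace(tmp):
            print(folder)
```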

3. Integrate Model Components

Place flexivit_s_i1k.npz, which contains the pre-trained model weights, in the appropriate directory and update your script to point to its path so the model can be initialized correctly.

4. Confirm Environment Compatibility

Run a preliminary test by importing the required libraries and loading a sample input through the model. This step checks for any compatibility issues before diving into more extensive training or testing.
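A lightweight version of this preliminary test checks that the required packages can be found before anything is imported. The package list is an assumption based on big_vision being built on JAX and Flax, with TensorFlow and TFDS used for input pipelines. Extend the list to match your setup. Once the check passes, load the checkpoint and run a sample input.

```python
import importlib.util
import sys

# Assumed dependency list; extend it to match your setup.
REQUIRED = ("numpy", "tensorflow", "tensorflow_datasets", "jax", "flax")


def missing_packages(names=REQUIRED):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    missing = missing_packages()
    print("missing:", missing or "none")
```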

Evaluating Model Performance Metrics

For a comprehensive assessment, use metrics such as IoU and AP. IoU quantifies the overlap between predicted and ground-truth bounding boxes, which is essential for object localization. AP summarizes precision across all recall levels as the area under the precision-recall curve. For multi-class evaluation, mAP averages the per-class AP scores.

Precision measures true positives relative to all positive predictions, while Recall measures true positives relative to all actual positives. The F1 Score combines precision and recall for a balanced performance evaluation.
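The sketch below computes AP and mAP from ranked predictions. It uses the non-interpolated form, which averages precision over the ranks at which each true positive appears. Benchmarks such as PASCAL VOC and COCO use interpolated variants, so the numbers will not match those toolkits exactly. The optional num_positives argument lets missed ground-truth objects lower the score.

```python
def average_precision(scores, labels, num_positives=None):
    """Non-interpolated AP for one class.

    scores: confidence of each prediction; labels: 1 if that prediction is a true positive.
    num_positives: total ground-truth positives (defaults to sum(labels)).
    """
    total = sum(labels) if num_positives is None else num_positives
    if total == 0:
        return 0.0
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    tp = 0
    ap = 0.0
    for rank, (_, is_pos) in enumerate(ranked, start=1):
        if is_pos:
            tp += 1
            ap += tp / rank  # precision at the rank of this true positive
    return ap / total


def mean_average_precision(per_class):
    """Average of per-class AP; per_class is a list of (scores, labels) pairs."""
    aps = [average_precision(s, l) for s, l in per_class]
    return sum(aps) / len(aps)


if __name__ == "__main__":
    print(average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
```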

Visual Analysis Tools

Visualizing model results enhances interpretation:

- F1 Score Curve: Shows performance balance at various thresholds.

- Precision-Recall Curve: Demonstrates trade-offs between precision and recall.

- Confusion Matrix: Provides a detailed breakdown of true/false positives and negatives per class.

Comparing FlexiViT with Standard Models

FlexiViT excels in comparison with traditional fixed-patch Vision Transformers, demonstrating adaptability in performance across different patch sizes. Its ability to conduct resource-efficient transfer learning with a larger patch size during fine-tuning and deploy with smaller ones makes it an attractive choice, particularly for resource-constrained scenarios.

FlexiViT keeps its patch-size flexibility after fine-tuning, so a fine-tuned model can still be deployed at a different patch size without retraining. This makes it a powerful and adaptable choice for modern image processing tasks, combining high performance with resource efficiency.

Facts:

- Model Overview:

- big_vision/flexivit/flexivit_s_i1k.npz is a powerful and flexible model in computer vision and machine learning.

- It is based on FlexiViT, a Vision Transformer variant designed to handle variable patch sizes (from 8×8 to 48×48 pixels) efficiently.

- Core Features:

- Patch Size Flexibility: Can adapt to varying patch sizes, which aids in efficient training and transfer learning.

- Resource Efficiency: Optimizes memory and computational resources, supporting fine-tuning with larger patches and deploying with smaller ones.

- Comparable Performance: Performs similarly or better than fixed-patch Vision Transformers, especially with different patch sizes.

- Installation and Environment Setup:

- Required libraries include TensorFlow and TensorFlow Datasets (TFDS).

- Datasets should be downloaded using big_vision.tools.download_tfds_datasets or placed manually in ~/tensorflow_datasets/downloads/manual/.

- Data Preparation and Preprocessing:

- Supports formats like JPEG, PNG, and TIFF.

- Recommended data augmentation techniques include random cropping, horizontal flipping, color jittering, and rotation/zoom.

- Model Training and Fine-Tuning:

- Start with larger patch sizes for fine-tuning and shift to smaller patches for deployment.

- Hyperparameter tuning using methods like cross-validation can optimize performance.

- Evaluation Metrics:

- Common metrics include Intersection over Union (IoU), Average Precision (AP), Mean Average Precision (mAP), Precision, Recall, and F1 Score.

- Visualization tools include Precision-Recall curves and confusion matrices.

- Performance Comparison:

- FlexiViT outperforms or matches traditional Vision Transformers, particularly across multiple patch sizes.

- Adaptable for resource-constrained environments, offering better resource management and quick adaptation.

Summary:

The big_vision/flexivit/flexivit_s_i1k.npz model, based on the FlexiViT architecture, is a significant advancement in computer vision. Unlike traditional Vision Transformers that rely on a fixed patch size, FlexiViT supports varying patch sizes (8×8 to 48×48 pixels), enabling it to perform well across a range of tasks and hardware configurations. This model is resource-efficient, optimizing training with larger patches and deploying with smaller ones, which makes it suitable for scenarios with limited computational power.

The setup process involves installing TensorFlow and TFDS, preparing data using common formats, and applying data augmentation techniques. For training, it’s recommended to use pre-trained weights and fine-tune the model using a progressive patch size approach. Hyperparameter optimization ensures the best performance, with evaluation relying on metrics like IoU, AP, mAP, and the F1 Score, visualized through curves and confusion matrices.

FlexiViT’s ability to adapt to various patch sizes and its effectiveness in different environments give it an edge over traditional Vision Transformers, making it a robust choice for modern image-processing tasks.
