Introduction

In the digital age, data interpretation has become a vital skill for professionals across many fields. The 1-s2.0-s1097276523004665-mmc3 dataset has emerged as a valuable resource, offering insights that can transform decision-making processes. Understanding how to interpret this complex dataset effectively is essential for researchers, analysts, and decision-makers who aim to extract meaningful information and drive innovation in their respective domains.

This article delves into the intricacies of interpreting 1-s2.0-s1097276523004665-mmc3 data, providing a comprehensive guide to maximizing its potential. Readers will gain insight into the dataset’s structure, learn about essential preparation steps, and discover effective analysis techniques. By mastering these skills, professionals can unlock the full value of 1-s2.0-s1097276523004665-mmc3 data, enabling them to make informed decisions and stay ahead in their rapidly evolving industries.

Understanding 1-s2.0-s1097276523004665-mmc3 Data

What is 1-s2.0-s1097276523004665-mmc3?

The 1-s2.0-s1097276523004665-mmc3 dataset represents a significant resource in the field of scientific research. This comprehensive collection of data encompasses a wide range of information related to various scientific disciplines. Researchers and analysts use this dataset to gain insights into complex phenomena and to support their studies across numerous domains.

Key components of the dataset

The 1-s2.0-s1097276523004665-mmc3 dataset is composed of several essential components that contribute to its value in research:

Raw Data: This forms the foundation of the dataset, consisting of original information collected from various sources and experiments.

Metadata: Accompanying the raw data, metadata provides context and additional information about the data points, enhancing their interpretability.

Analytical Tools: The dataset often includes specialized tools and algorithms designed to facilitate data analysis and interpretation.

Documentation: Comprehensive documentation is usually provided, offering guidance on data structure, collection methods, and best practices for interpretation.

Importance in research

The importance of the 1-s2.0-s1097276523004665-mmc3 dataset in research cannot be overstated. It serves as a valuable resource for scientists and researchers across various disciplines, enabling them to:

- Conduct In-depth Analyses: The dataset allows for thorough examination of complex phenomena, supporting the development of new theories and hypotheses.

- Validate Findings: Researchers can use the dataset to verify and validate their own experimental results, enhancing the reliability of their studies.

- Identify Patterns and Trends: By analyzing the extensive data available, scientists can uncover hidden patterns and trends that may not be apparent in smaller datasets.

- Foster Collaboration: The shared nature of the dataset promotes collaboration among researchers, facilitating the exchange of ideas and methodologies.

- Drive Innovation: Insights derived from the 1-s2.0-s1097276523004665-mmc3 dataset often lead to innovative approaches and breakthroughs in various scientific fields.

Understanding the structure and components of the 1-s2.0-s1097276523004665-mmc3 dataset is crucial for researchers looking to harness its full potential. By familiarizing themselves with its key components and recognizing its significance in research, scientists can leverage this valuable resource to advance their studies and contribute to scientific progress.

Efficient Data Analysis Techniques

Statistical methods for interpretation

Efficient analysis of 1-s2.0-s1097276523004665-mmc3 data requires a solid understanding of statistical methods. Descriptive statistics summarize data using measures such as the mean and median, whereas inferential statistics draw conclusions using statistical tests such as Student’s t-test. The choice of appropriate statistical methods depends on the study’s aim, the data type and distribution, and the nature of the observations (paired or unpaired).
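As a minimal sketch of the two kinds of statistics described above, the snippet below summarizes a hypothetical two-group measurement with the mean and median, then applies Student’s t-test using SciPy’s `scipy.stats.ttest_ind`. The column names `group` and `value` and the simulated numbers are illustrative assumptions, not contents of the 1-s2.0-s1097276523004665-mmc3 data. SciPy is an added dependency here; it is not in the library list later in this article.

```python
import numpy as np
import pandas as pd
from scipy import stats

# Hypothetical data: two independent groups of a continuous measurement.
# Replace with the actual columns of the dataset being analyzed.
rng = np.random.default_rng(seed=0)
df = pd.DataFrame({
    "group": ["control"] * 30 + ["treated"] * 30,
    "value": np.concatenate([
        rng.normal(loc=10.0, scale=2.0, size=30),
        rng.normal(loc=12.0, scale=2.0, size=30),
    ]),
})

# Descriptive statistics: summarize each group (count, mean, median, SD).
summary = df.groupby("group")["value"].agg(["count", "mean", "median", "std"])
print(summary)

# Inferential statistics: Student's t-test for two independent (unpaired) groups.
# equal_var=True gives the classic Student's test; equal_var=False gives Welch's test.
control = df.loc[df["group"] == "control", "value"]
treated = df.loc[df["group"] == "treated", "value"]
t_stat, p_value = stats.ttest_ind(control, treated, equal_var=True)
print(f"t = {t_stat:.3f}, p = {p_value:.4f}")

# For paired observations (e.g. before/after on the same subjects),
# stats.ttest_rel would be the appropriate test instead.
```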

Parametric methods, such as t-tests and ANOVA, compare means and are used for continuous data that follow a normal distribution. Nonparametric methods, such as the Mann-Whitney U test and the Kruskal-Wallis H test, are used for non-normal distributions or for data types other than continuous variables. Selecting the correct method is crucial, because inappropriate statistical techniques can lead to serious errors in interpreting findings and can distort study conclusions.
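The choice between parametric and nonparametric tests can be sketched as a small helper. The function `compare_groups` below is hypothetical, and the rule it applies (a Shapiro-Wilk normality check on each group before choosing the test) is one common heuristic rather than a prescribed procedure; many analysts prefer to decide from the study design and prior knowledge instead. It assumes independent (unpaired) groups with at least three observations each.

```python
import numpy as np
from scipy import stats


def compare_groups(*groups, alpha=0.05):
    """Pick a parametric or nonparametric test for independent groups.

    Heuristic (an assumption, not a fixed rule): if every group passes a
    Shapiro-Wilk normality check at level alpha, use a parametric test;
    otherwise fall back to a rank-based nonparametric test.
    Returns (test name, statistic, p-value).
    """
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    normal = all(stats.shapiro(g).pvalue > alpha for g in groups)
    if len(groups) == 2:
        if normal:
            name, result = "Student's t-test", stats.ttest_ind(*groups)
        else:
            name, result = "Mann-Whitney U test", stats.mannwhitneyu(
                *groups, alternative="two-sided")
    else:
        if normal:
            name, result = "one-way ANOVA", stats.f_oneway(*groups)
        else:
            name, result = "Kruskal-Wallis H test", stats.kruskal(*groups)
    return name, result.statistic, result.pvalue


# Usage with simulated, right-skewed (exponential) samples.
rng = np.random.default_rng(1)
skewed_a = rng.exponential(scale=1.0, size=40)
skewed_b = rng.exponential(scale=2.0, size=40)
name, stat, p = compare_groups(skewed_a, skewed_b)
print(f"{name}: statistic = {stat:.3f}, p = {p:.4f}")
```

ANOVA also assumes roughly equal variances across groups; when that is doubtful, a Welch-type test is a common alternative.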

Machine learning approaches

Machine learning has emerged as a powerful tool for analyzing complex descriptors such as myocardial motion and deformation in 1-s2.0-s1097276523004665-mmc3 data. These methods can be broadly categorized into supervised and unsupervised approaches. Supervised learning methods, such as support vector machines (SVM) and random forests, are used for classification and regression tasks. They require labeled data for training and can predict outcomes such as disease diagnosis or treatment response.
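A minimal supervised-learning sketch with scikit-learn follows. It trains an SVM and a random forest on synthetic labeled data from `make_classification`, which stands in for real features and an outcome label such as a diagnosis; the hyperparameters are illustrative defaults, not tuned values.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic labeled data standing in for real features (X) and outcomes (y).
X, y = make_classification(n_samples=300, n_features=10, n_informative=5,
                           random_state=42)

models = {
    # SVMs are sensitive to feature scale, so standardize inside the pipeline.
    "SVM (RBF kernel)": make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0)),
    # Tree ensembles do not need feature scaling.
    "Random forest": RandomForestClassifier(n_estimators=200, random_state=42),
}

scores = {}
for name, model in models.items():
    # 5-fold cross-validated accuracy is less optimistic than training accuracy.
    cv = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    scores[name] = cv.mean()
    print(f"{name}: mean accuracy {cv.mean():.3f} (+/- {cv.std():.3f})")
```

Putting the scaler inside the pipeline means it is refitted on each training fold, so the cross-validation estimate is not contaminated by statistics from the held-out fold.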

Unsupervised learning methods, including clustering and dimensionality reduction, reveal insights into the data distribution without explicit reference to a specific clinical question. These methods are particularly useful when existing labels are not fully trusted or when a supervised formulation of the clinical problem is unclear.
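The unsupervised workflow can be sketched in the same way: standardize the features, project them onto two principal components with PCA for inspection, and cluster with k-means, using the silhouette score to compare candidate cluster counts. The data here are synthetic blobs with their labels discarded, and the range of k tried is an arbitrary assumption.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler

# Synthetic data; the true group labels are discarded to mimic an unlabeled setting.
X, _ = make_blobs(n_samples=300, n_features=8, centers=3, random_state=0)
X_scaled = StandardScaler().fit_transform(X)

# Dimensionality reduction: keep the first two principal components.
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)
print("explained variance ratio:", pca.explained_variance_ratio_.round(3))

# Clustering: fit k-means for several k and compare silhouette scores (higher is better).
silhouettes = {}
for k in range(2, 6):
    labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X_scaled)
    silhouettes[k] = silhouette_score(X_scaled, labels)

best_k = max(silhouettes, key=silhouettes.get)
print("silhouette by k:", {k: round(s, 3) for k, s in silhouettes.items()})
print("suggested number of clusters:", best_k)
```

The silhouette score is only one of several criteria for choosing k; the 2-D projection `X_2d` can be plotted to check visually whether the suggested clusters are plausible.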

Visualization techniques for insights

Data visualization plays a vital role in interpreting 1-s2.0-s1097276523004665-mmc3 data efficiently. Advanced visualization techniques help businesses identify patterns, trends, and correlations that might otherwise go unnoticed. Interactive dashboards allow users to manipulate data and view it from different perspectives in real time, facilitating a deeper understanding of the data and supporting better-informed business decisions.

Other powerful visualization techniques include geospatial visualization, heat maps, and network graphs. These techniques enable analysts to map data points onto geographic locations, display data intensity across two-dimensional spaces, and visualize connections between different entities within a network. By using a variety of visualization techniques, businesses can tailor their analyses to their data and analytical needs, ultimately leading to more effective interpretation of 1-s2.0-s1097276523004665-mmc3 data.
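As an illustration of a heat map, the sketch below draws a correlation heat map of a hypothetical numeric table using Matplotlib. The column names and simulated relationships are assumptions for demonstration, and the output file name `correlation_heatmap.png` is arbitrary.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; drop this line in an interactive session
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Hypothetical numeric table; substitute the columns to be compared.
rng = np.random.default_rng(seed=0)
base = rng.normal(size=200)
df = pd.DataFrame({
    "feature_a": base,
    "feature_b": base * 0.8 + rng.normal(scale=0.5, size=200),
    "feature_c": rng.normal(size=200),
    "feature_d": -base + rng.normal(scale=1.0, size=200),
})

corr = df.corr()  # Pearson correlation matrix

fig, ax = plt.subplots(figsize=(5, 4))
im = ax.imshow(corr.to_numpy(), cmap="coolwarm", vmin=-1, vmax=1)
ax.set_xticks(range(len(corr.columns)))
ax.set_xticklabels(corr.columns, rotation=45, ha="right")
ax.set_yticks(range(len(corr.index)))
ax.set_yticklabels(corr.index)

# Annotate each cell with its correlation coefficient.
for i in range(corr.shape[0]):
    for j in range(corr.shape[1]):
        ax.text(j, i, f"{corr.iat[i, j]:.2f}", ha="center", va="center", fontsize=8)

fig.colorbar(im, ax=ax, label="Pearson r")
ax.set_title("Correlation heat map")
fig.tight_layout()
fig.savefig("correlation_heatmap.png", dpi=150)
```

With Seaborn installed, `seaborn.heatmap(corr, annot=True, cmap="coolwarm", vmin=-1, vmax=1)` produces a similar figure in a single call. Fixing `vmin=-1` and `vmax=1` keeps the color scale comparable across different tables.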

Preparing for Data Interpretation

Required tools and software

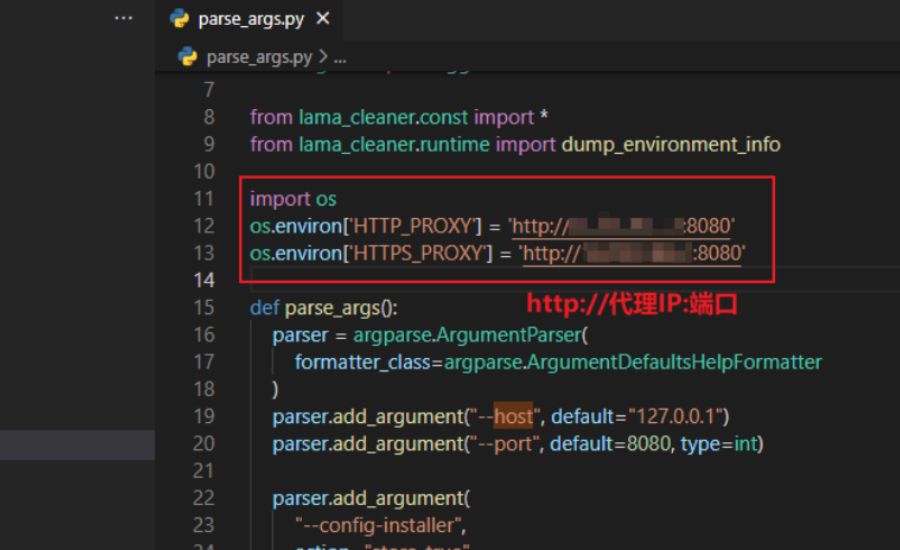

To interpret 1-s2.0-s1097276523004665-mmc3 data effectively, researchers need a robust set of tools and software. Python has become a popular choice for data science projects because of its versatility and extensive library ecosystem. Visual Studio Code (VS Code) has gained traction as a preferred integrated development environment (IDE) for data scientists, offering features such as debugging, syntax highlighting, and intelligent code completion.

Essential Python libraries for data interpretation include:

- NumPy: For numerical computing and array operations

- Pandas: For data manipulation and analysis

- Matplotlib and Seaborn: For data visualization

- Scikit-learn: For machine learning tasks

These libraries form the foundation of most data science workflows and are crucial for interpreting complex datasets like 1-s2.0-s1097276523004665-mmc3.
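To confirm that the libraries listed above are installed in the active environment, a short check using only the standard library’s `importlib.metadata` (available since Python 3.8) can report each package’s version. The helper name `check_packages` is hypothetical. Note that the distribution name for Scikit-learn is `scikit-learn`, even though it is imported as `sklearn`.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as published on PyPI/conda (scikit-learn is imported as sklearn).
REQUIRED = ["numpy", "pandas", "matplotlib", "seaborn", "scikit-learn"]


def check_packages(names):
    """Map each distribution name to its installed version string, or None if missing."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in check_packages(REQUIRED).items():
        print(f"{name:<14} {ver or 'NOT INSTALLED'}")
```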

Setting up your environment

Creating a suitable environment is important for efficient data interpretation. Virtual environments help manage project dependencies and ensure reproducibility. Conda, a package management system, is widely used to create and manage these environments.

To set up a data science environment:

- Install Miniconda, a lightweight version of Anaconda

- Create a new virtual environment using the command:

conda create -n data_science_env python=3.8

- Activate the environment:

conda activate data_science_env

- Install the necessary packages:

conda install numpy pandas matplotlib seaborn scikit-learn

Version control systems such as Git are essential for tracking code changes and collaborating with others. Initialize a Git repository in your project folder with git init to start tracking your code.

Data preprocessing steps

Preprocessing is a critical step in preparing 1-s2.0-s1097276523004665-mmc3 data for interpretation. It involves several key steps:

- Data Cleaning: Identify and correct errors, inconsistencies, and missing values in the dataset.

- Data Transformation: Convert data into a suitable format for analysis, which may involve changing data types or structures.

- Feature Engineering: Create new features or modify existing ones to improve model performance. This includes scaling, normalization, and standardization of data.

- Feature Selection: Select the most relevant features for your analysis to reduce dimensionality and improve model efficiency.

- Handling Imbalanced Data: Address any class imbalance in the dataset to ensure fair representation of all categories.

- Encoding Categorical Features: Convert categorical variables into numerical form using techniques such as one-hot encoding or ordinal encoding.

- Data Splitting: Divide the dataset into training, validation, and test sets to evaluate model performance accurately.

By following these preprocessing steps, researchers can ensure that the 1-s2.0-s1097276523004665-mmc3 data is in an optimal state for interpretation and analysis, leading to more reliable and accurate results.
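The remaining steps (data splitting, feature selection, and handling class imbalance) can be sketched as follows. The data are synthetic with a roughly 90/10 class ratio; the 60/20/20 split, `k=5` selected features, and the use of `class_weight="balanced"` in a logistic regression are illustrative choices rather than recommendations for this dataset.

```python
from collections import Counter

from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import balanced_accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic imbalanced labels (about 90% / 10%) standing in for a real outcome column.
X, y = make_classification(n_samples=1000, n_features=20, n_informative=4,
                           weights=[0.9, 0.1], random_state=0)

# Data splitting: 60% train, 20% validation, 20% test, stratified to keep class ratios.
X_train, X_temp, y_train, y_temp = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=0)
X_val, X_test, y_val, y_test = train_test_split(
    X_temp, y_temp, test_size=0.5, stratify=y_temp, random_state=0)
print("class counts train/val/test:", Counter(y_train), Counter(y_val), Counter(y_test))

model = make_pipeline(
    StandardScaler(),
    # Feature selection: keep the 5 features with the highest ANOVA F-score.
    SelectKBest(score_func=f_classif, k=5),
    # Imbalance: class_weight="balanced" reweights samples inversely to class frequency.
    LogisticRegression(class_weight="balanced", max_iter=1000),
)
model.fit(X_train, y_train)  # every step is fitted on the training split only

# Balanced accuracy averages per-class recall, so the majority class cannot dominate it.
val_score = balanced_accuracy_score(y_val, model.predict(X_val))
print("validation balanced accuracy:", round(val_score, 3))
```

Class weighting is only one option; resampling methods such as oversampling the minority class (for example SMOTE, from the separate imbalanced-learn package) are a common alternative. The test split is held back until model choices are final.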

Facts:

- Dataset Overview: The 1-s2.0-s1097276523004665-mmc3 dataset is a valuable resource for scientific research, containing data across multiple disciplines. It is used for deep analysis, hypothesis validation, identifying patterns, and fostering collaboration in scientific fields.

- Key Components:

- Raw Data: Original data collected from various experiments and sources.

- Metadata: Provides contextual information to enhance data interpretation.

- Analytical Tools: Includes tools and algorithms for analyzing data.

- Documentation: Offers guidance on data structure, collection methods, and analysis best practices.

- Significance: The dataset is critical for researchers as it supports complex analyses, validates findings, identifies hidden patterns, and promotes innovation.

- Analysis Techniques:

- Statistical Methods: Involve both descriptive statistics (e.g., mean, median) and inferential techniques (e.g., t-tests, ANOVA, Mann-Whitney U test) to interpret data effectively.

- Machine Learning: Supervised methods (e.g., SVM, random forests) and unsupervised methods (e.g., clustering) are used to extract insights from the dataset.

- Visualization: Advanced visualization methods like heat maps, geospatial plots, and network graphs are used to display data patterns and relationships.

- Tools and Software: Python is a popular choice for analyzing such datasets, with libraries like NumPy, Pandas, Matplotlib, and Scikit-learn being essential for data manipulation, visualization, and machine learning.

- Data Preprocessing: Steps like data cleaning, transformation, feature engineering, and balancing are critical for preparing the dataset for analysis and ensuring high-quality results.

Summary:

This article explores how to effectively analyze and interpret the 1-s2.0-s1097276523004665-mmc3 dataset, a crucial resource in scientific research. The dataset comprises raw data, metadata, analytical tools, and documentation, all of which contribute to its value in research. The article outlines key techniques for data analysis, including statistical methods and machine learning approaches, and emphasizes the importance of data visualization to identify trends and insights. Furthermore, it provides a guide on the tools and software necessary for data analysis, with Python and its associated libraries being highlighted as essential tools. Preprocessing steps such as cleaning, transforming, and balancing the data are also discussed to ensure reliable and accurate results.

FAQs:

- What is the 1-s2.0-s1097276523004665-mmc3 dataset?

- The 1-s2.0-s1097276523004665-mmc3 dataset is a valuable compilation of data used in scientific research across multiple disciplines. It provides raw data, metadata, and analytical tools, enabling researchers to perform in-depth analysis and derive insights.

- What are the key components of the 1-s2.0-s1097276523004665-mmc3 dataset?

- The dataset includes raw data, metadata for context, analytical tools, and comprehensive documentation to assist in its interpretation.

- How is the 1-s2.0-s1097276523004665-mmc3 dataset used in research?

- Researchers use this dataset for analyzing complex phenomena, validating hypotheses, identifying patterns, and driving innovation in various scientific fields.

- What tools are required to analyze this dataset?

- Tools such as Python, along with libraries like NumPy, Pandas, Matplotlib, Seaborn, and Scikit-learn, are essential for effective data analysis and visualization.

- What are some common analysis techniques used with the dataset?

- Statistical techniques like t-tests, ANOVA, and machine learning methods like supervised learning (e.g., SVM) and unsupervised learning (e.g., clustering) are commonly used to analyze the data.

- Why is data preprocessing important?

- Data preprocessing ensures that the data is clean, transformed, and in the right format for analysis. Steps like data cleaning, feature engineering, and addressing imbalances improve the accuracy and reliability of the analysis.

- What is the role of data visualization in interpreting the dataset?

- Data visualization helps in uncovering patterns and relationships within the data, making it easier for researchers to interpret the results and make informed decisions.
